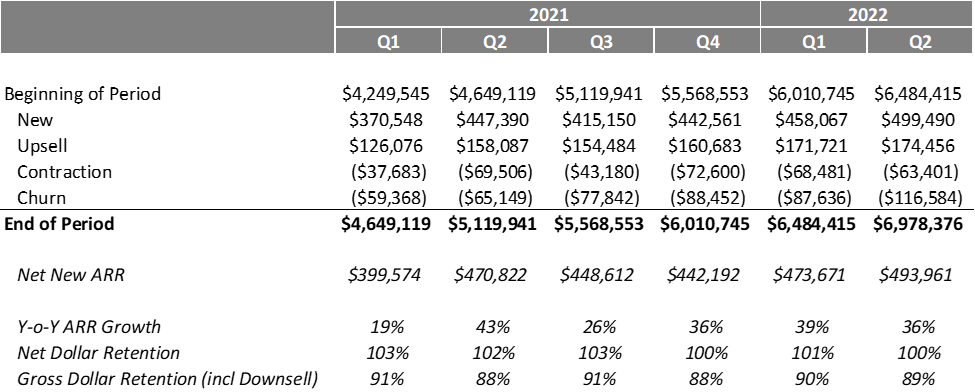

Over the past decade, the “ARR waterfall” or “SaaS revenue waterfall” has become a mainstay within the landscape of SaaS metrics and reporting. This is hardly surprising: operators, investors, and analysts all need a commonly accepted standard for assessing the topline performance of SaaS businesses, and the ARR waterfall conveys so much in only a few lines (for this reason, I’d submit that the ARR waterfall is essentially the haiku of SaaS reporting…but I digress). As in the example below, an ARR waterfall neatly ties together new bookings, upsell, contraction, and churn into a structured, easily digestible story around the surprisingly complex question of how much net Annual Recurring Revenue (ARR) a business adds in each period.

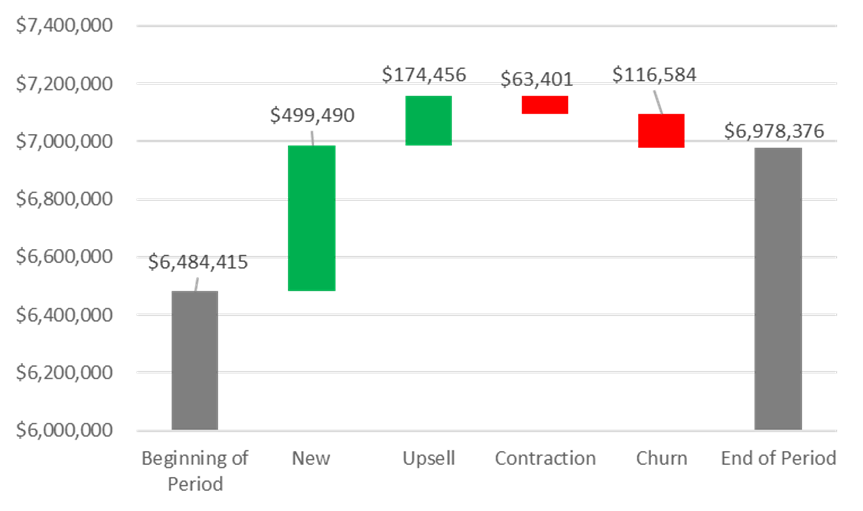

We also like a purely graphical / summary representation of this table, which is likely the source of the term “waterfall”:

This all works well in a prototypically tidy SaaS business: clients pay a specified amount for subscriptions to use a SaaS solution over a designated period, renewing that commitment at regular intervals. These renewals typically take place on an annual or monthly basis (or do NOT take place, resulting in “churn” or “drops”). Likewise, this quintessential SaaS business tends to offer graduated packages (“Good / Better / Best”) of its solution and/or allows customers to add or remove modules on top of a base subscription (“Core + More”). These add-ons lead to ARR expansion (aka “upsell”) but can also result in contraction when customers elect to scale back their licensed capabilities (aka “downsell”). In this scenario, new bookings, upsell, contraction, and churn all tend to be discrete, knowable, and quantifiable figures…in other words, comfortingly black-and-white.

The dirty secret within many SaaS businesses, however, is that the world is a lot messier and more complicated than ARR waterfalls would make it appear. Below are just a few examples of common nuances, along with the inevitable Good, Bad, and Ugly that each represents to SaaS providers trying to craft a tidy ARR waterfall — and, finally, some closing thoughts around how to manage such messiness:

· What if your business offers usage-based pricing, whereby clients get billed based on an amount of actual usage over a given period? Note: usage can be measured in countless ways (number of users, volume of transactions processed, database calls executed, and others).

· What if a benefit of your SaaS offering is the ability for clients to terminate contracts at their convenience and/or that these are evergreen contracts with no set renewal date?

· What if there is a mix of pure subscriptions (where clients manage their use of the software) along with technology-enabled recurring managed services (where the vendor provides admin and management of the solution on customers’ behalf)?

Before going any further, I’d offer that these situations are surprisingly common in the SaaS world. Many SaaS businesses, particularly those that Lock 8 invests in, offer such contractual nuances that go against traditional, commonly accepted SaaS “best practices.” Although SaaS purists tend to pooh-pooh anything less than straight subscription revenue, customers often appreciate and ascribe value to these nuances (as Jason Lemkin wrote compellingly about here); and smart companies make the conscious decision to manage the tradeoffs described above. Some of the best companies we’ve worked with do exactly that. But…there is a catch. If your revenue model deviates from pure subscriptions, then it’s important to take steps to manage and monitor ARR reporting with great intentionality, as follows:

Now for the big finish to this post, please cue audio from TLC’s classic, and former Billboard #1 hit, “Waterfalls.” Per TLC’s timeless wisdom: “Don’t go chasing waterfalls”…instead, carefully Define, Align / Refine, Baseline, and Trend Line your own.

When I joined my first subscription-based software business in 1999, the term Software-as-a-Service wasn’t even a thing. Since then, SaaS has emerged as the dominant form of software delivery, accompanied by quantum leaps in the study and understanding of its underlying business model. Correspondingly, there is a wealth of outstanding available resources that explain the fundamental principles and performance indicators used to evaluate and operate SaaS businesses (including this and this and this, to name only a few). These and countless other sites are invaluable in explaining the metrics that matter in SaaS. But there is an important metric that seems to consistently fly below the radar. While it likely has many names, we call it DROP ALLOWANCE. Drop allowance takes an opaque retention target and brings it to life by applying it to the actual pool of renewing client logos and revenue. The purpose of this post is to examine the concept of drop allowance and to explore some related metrics and their use.

First things first: drop allowance is focused on GROSS CHURN. For good reasons, the SaaS world seems to have increasingly focused in recent years on NET CHURN and the related NET REVENUE RETENTION. While those KPIs can be quite useful, I’d argue GROSS CHURN (and the inversely correlated GROSS RETENTION) is unique in its ability to spotlight and quantify the underlying stickiness of a software solution. Whereas successful upselling can boost NET REVENUE RETENTION and camouflage troubling subscription-drops, there is simply no hiding drops and down-sells with GROSS CHURN. And, although virtually all SaaS businesses set targets for some flavor of churn/retention, seemingly fewer monitor gross churn against an actual drop allowance pool.

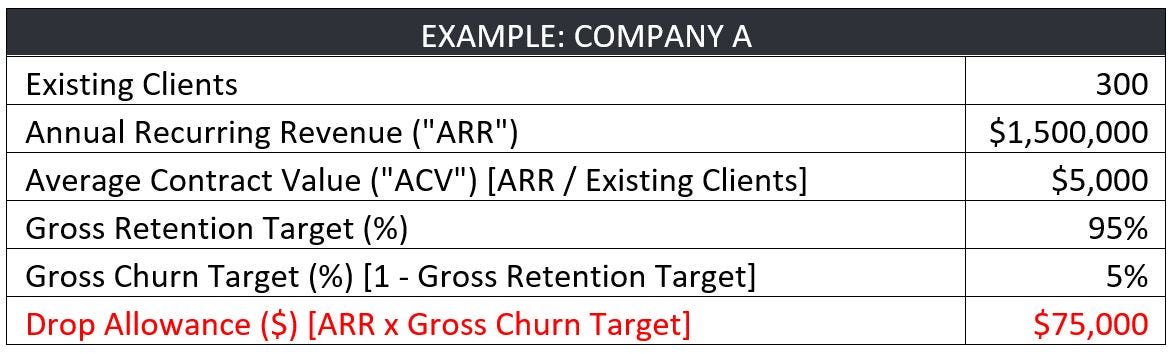

Another point of set-up: the core value of a drop allowance is to translate high-level retention targets into something meaningful and actionable for operators of the business. Churn/retention targets tend to take the form of high-level percentages (e.g. “…our goal is 5% gross churn,” OR ”…we’re planning for 95% gross retention”). The problem is that such percentages tend not to be very meaningful on a day-to-day basis to front-line people responsible for ensuring client renewals. To combat that, drop allowance can be easily calculated, as follows:

Hopefully, this is all straightforward, so let’s dig into an example of a business with the following profile:

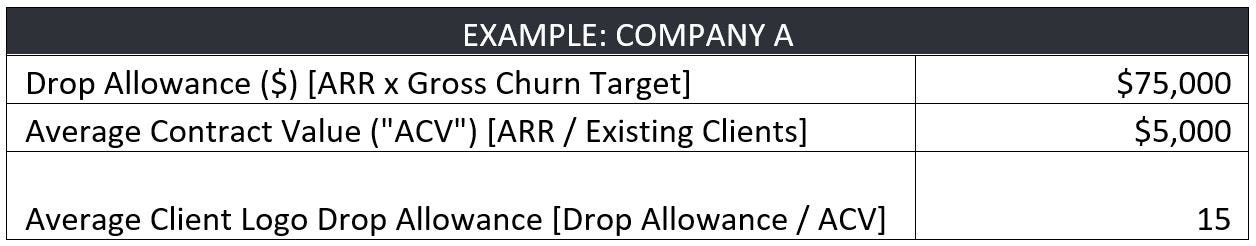

We’ve found this small act of translating percentage-based objectives into a $-based goal to be helpful to the whole team, and particularly to renewal professionals (such as Client Success Reps, Account Managers, and Salespeople). But that’s just a start. Next, layer in ACV, as follows:

This simple math makes it clear that if we head into the year with 300 paying clients, achieving our retention goal relies on having no more than 15 average-sized clients cancel their subscriptions across the course of the year. Gulp…this just got real.
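For readers who prefer to see the arithmetic spelled out, here is a minimal Python sketch of the drop allowance math. It assumes the figures quoted in this post (a $1.5M renewal pool, 300 clients, and a 5% gross churn target); the function name `drop_allowance` and its dictionary keys are illustrative choices of mine, not a standard API.

```python
def drop_allowance(renewal_pool: float, gross_churn_target: float, client_count: int) -> dict:
    """Translate a percentage churn target into dollars and logos.

    Assumption: ACV is the simple average (renewal pool / client count).
    """
    allowance_dollars = renewal_pool * gross_churn_target  # $ we can afford to lose
    acv = renewal_pool / client_count                      # average contract value
    allowance_logos = allowance_dollars / acv              # average-sized clients we can lose
    return {
        "allowance_dollars": allowance_dollars,
        "acv": acv,
        "allowance_logos": allowance_logos,
        "logos_per_month": allowance_logos / 12,           # only meaningful with zero seasonality
    }


# Figures taken from the example in this post.
budget = drop_allowance(renewal_pool=1_500_000, gross_churn_target=0.05, client_count=300)
print(f"Drop allowance: ${budget['allowance_dollars']:,.0f}")
print(f"ACV: ${budget['acv']:,.0f}")
print(f"Logos allowed: {budget['allowance_logos']:.0f}")
print(f"Logos per month: {budget['logos_per_month']:.2f}")
```

With these inputs, the sketch should land on the same $75,000 and 15-logo budget discussed in this post. Real businesses with widely variable client sizes would likely want to look at the dollar figure first and treat the logo count as a rough guide.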

Of course, we have large clients and small clients; and this approach also helps quantify the clear-and-present danger of large drops to our company’s health. Although I tend to err on the side of believing every client is worth working to retain, this also raises awareness among the team around where to direct their finite retention-focused resources.

By quantifying the total number of budgeted dropped logos, this also offers an approximation of the acceptable rate of drops across the year. If this business has ZERO seasonality (and hence, no renewal concentration in any given quarter or month), it can theoretically afford to average 1.25 logo drops per month [15 Average Logo Drops Allowed / 12 months]. But we can be even more precise than that, and this is where the real value of metrics related to drop allowance emerges. For the sake of argument, let’s proceed with that simplifying assumption that there is zero seasonality in the business; and that all 300 of the existing clients had been sold evenly across all historical months. We could plot out the renewal pool ($1.5M across 300 clients), drop allowance ($75,000 across 15 logos), and a related monthly budget for each, as follows:

Then we can make it more useful and dynamic by showing these numbers as cumulative across the year (with shading to help readability), as follows:
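The monthly and cumulative budgets described above can be sketched in a few lines of Python. This is a rough illustration under the same simplifying assumption of zero seasonality (so every month gets an equal one-twelfth share); the function `monthly_budget` and its column names are hypothetical, and a seasonal business would substitute its actual renewal schedule for the even split.

```python
def monthly_budget(renewal_pool: float, drop_allowance: float, months: int = 12) -> list:
    """Spread the renewal pool and drop allowance evenly across the year.

    Assumption: zero seasonality, so each month carries an equal share.
    """
    rows = []
    for month in range(1, months + 1):
        rows.append({
            "month": month,
            "pool_due": renewal_pool / months,                   # renewals coming due this month
            "allowance": drop_allowance / months,                # drops budgeted this month
            "cum_pool_due": renewal_pool * month / months,       # renewals due year-to-date
            "cum_allowance": drop_allowance * month / months,    # drops budgeted year-to-date
        })
    return rows


# Figures from this post: $1.5M renewal pool, $75,000 drop allowance.
rows = monthly_budget(renewal_pool=1_500_000, drop_allowance=75_000)
for row in rows:
    print(
        f"{row['month']:>2}  "
        f"${row['pool_due']:>10,.0f}  ${row['allowance']:>8,.0f}  "
        f"${row['cum_pool_due']:>12,.0f}  ${row['cum_allowance']:>8,.0f}"
    )
```

The same function could be run with logo counts (300 clients, 15 logos) instead of dollars to produce the logo-based version of the budget.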

So far, so good, right? Now…let’s move out of the realm of plans and averages, and into the messiness of the real world.

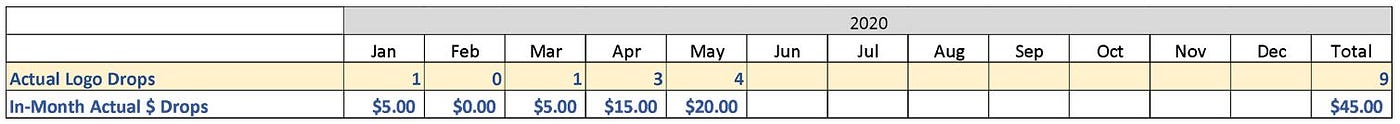

Let’s assume for a minute that we’re through five months of 2020, and we’ve been actively managing and monitoring our renewals, and they look like this:

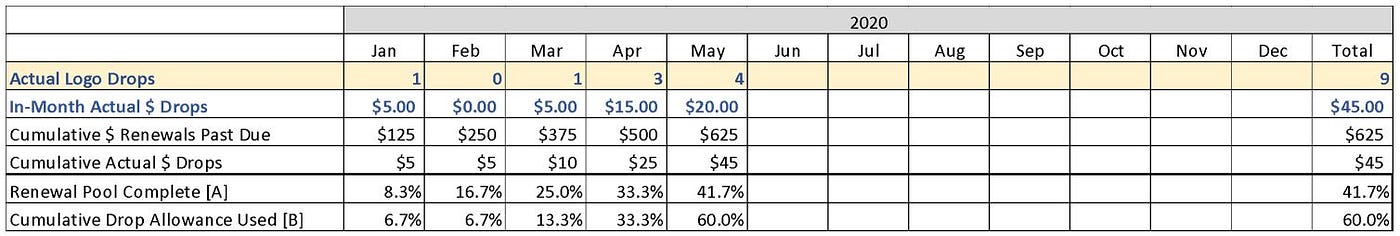

Viewed through this lens, it’s clear that retention has significantly worsened after having started the year well. But without a baseline, it’s hard to deduce much beyond that general assessment; and it’s even harder to quantify when we factor in complexities such as down-sells, seasonality of renewals, and widely variable client-sizes. Instead, leveraging drop allowance can offer a granular budget-versus-actual look every month (or even more real-time) and on a cumulative basis, as follows:

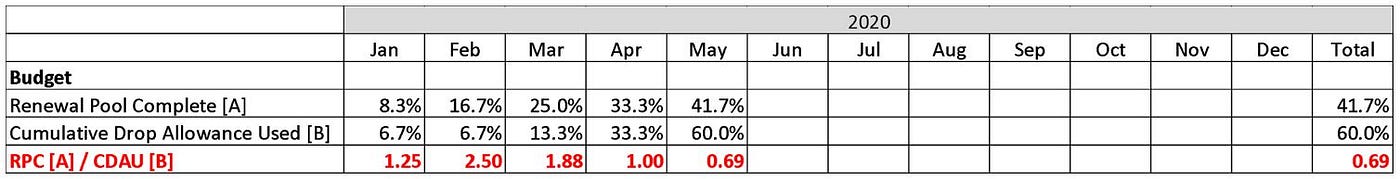

This is where the real pay-off comes. Armed with the above information, we can boil all of this renewal complexity down to one single metric that will tell us how we are performing on renewals, not just against the (maddeningly distant and monolithic) year-end goal, but rather on a rolling basis and relative to the seasonality of our renewal pool. This can be done by establishing a ratio of the RENEWAL POOL COMPLETE (“RPC”) [A] / CUMULATIVE DROP ALLOWANCE USED (“CDAU”) [B]. Using the numbers from above, it looks like this:

Admittedly, that is an awful lot of words, so we like to simply call this the YEAR-TO-DATE RETENTION RATIO. Whatever it’s called, this KPI offers easy-to-understand clarity around gross retention. By looking at this one number, we can know where we stand, relative to what renewals have already come due and which are still outstanding throughout the year. Quite simply, a YTD retention ratio >1.0 means that we are outperforming relative to possible renewals year-to-date; and a ratio below 1.0 should be cause for concern (i.e. we’ve already used up more of the drop allowance than budgeted, relative to renewals past due). Because I’m a visual learner, I like to see all of the above numbers as charts; below is a simple one for the YTD RETENTION RATIO from this example.
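To make the ratio concrete, here is a small Python sketch of one way to compute it. It reflects my reading of the definitions above: RPC is the share of the annual renewal pool that has come due so far, and CDAU is the share of the annual drop allowance already consumed. The function name and the year-to-date drop figures below are hypothetical and chosen purely for illustration; they are not the actuals from the example in this post.

```python
import math


def ytd_retention_ratio(pool_due_to_date: float, total_pool: float,
                        drops_to_date: float, total_allowance: float) -> float:
    """Ratio of Renewal Pool Complete (RPC) to Cumulative Drop Allowance Used (CDAU)."""
    rpc = pool_due_to_date / total_pool       # share of renewals that have come due
    cdau = drops_to_date / total_allowance    # share of the drop budget already spent
    if cdau == 0:
        return math.inf                       # no drops yet: nothing of the allowance used
    return rpc / cdau


# Through five months with zero seasonality, $625,000 of the $1.5M pool has come due.
# The drop amounts are hypothetical.
on_budget = ytd_retention_ratio(625_000, 1_500_000, 31_250, 75_000)
behind = ytd_retention_ratio(625_000, 1_500_000, 40_000, 75_000)
print(f"Drops exactly on budget: {on_budget:.2f}")
print(f"Drops ahead of budget:   {behind:.2f}")
```

In the second case, the business has used up a larger share of its drop allowance than the share of renewals that have come due, so the ratio falls below 1.0.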

This chart helps highlight how YTD retention ratio can serve as an early warning system, signaling the need for intervention. More so than most metrics, it spotlights changes in churn patterns with speed and sensitivity, which can tip off operators to dig into any number of related variables and levers. Taken together, these can help inform a number of operating decisions, including: timely personnel changes (e.g. does this problem warrant hiring an AM dedicated to renewals?), systems investments (e.g. should we invest in a client engagement solution?), and process improvements (e.g. how can we enhance our on-boarding?). I’ll plan to dig into this aspect of things in a future post.

Closing: I know that was a lot of math above, so let’s conclude with a few closing comments.